While social media companies court criticism over whom they choose to ban, tech ethics experts say the more important function these companies control happens behind the scenes, in what they recommend.

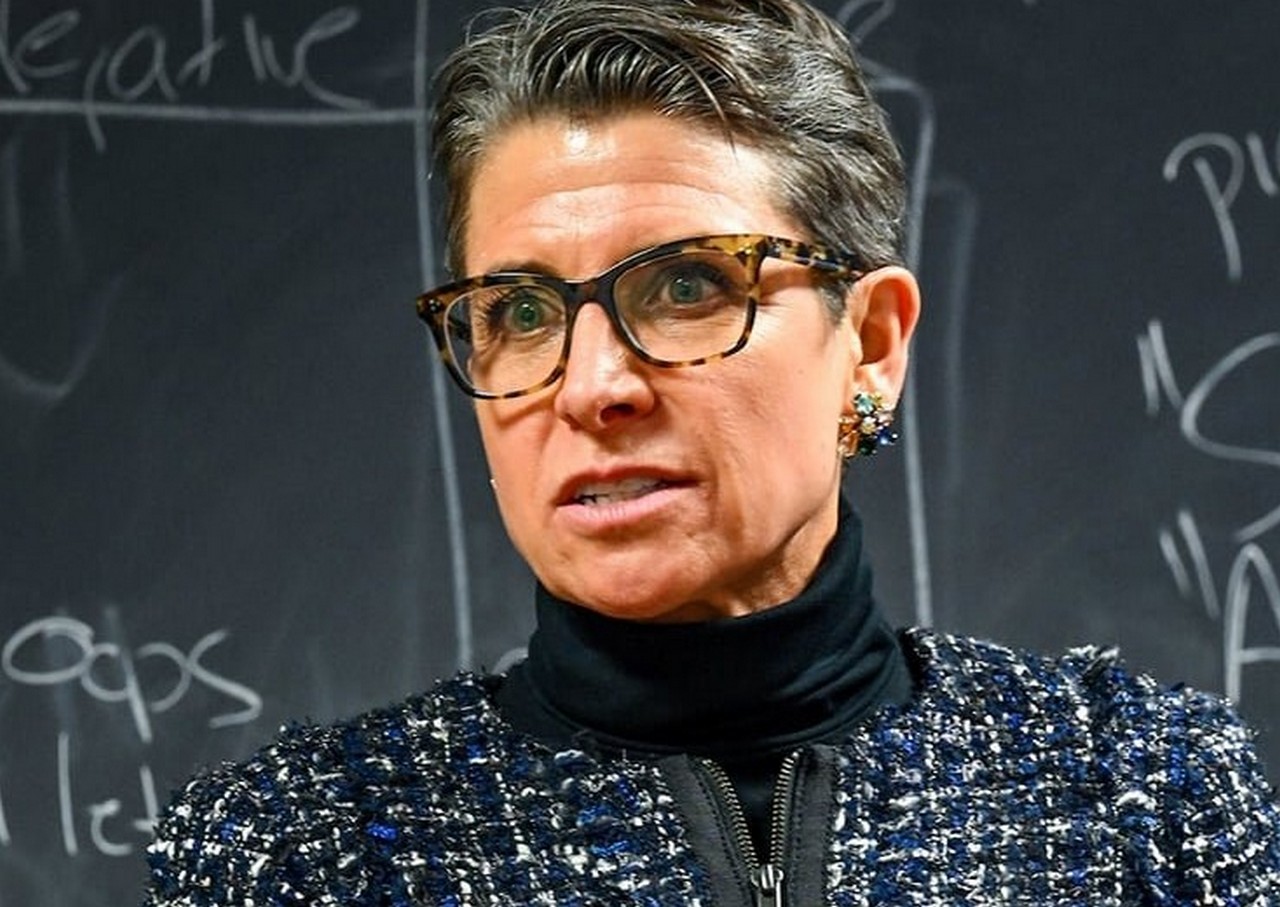

Kirsten Martin, director of the Notre Dame Technology Ethics Center (ND TEC), argues that optimizing recommendations for a single factor, engagement, is an inherently value-laden decision.

Human nature may be fascinated by and drawn to the most polarizing content; we can’t look away from a train wreck. But there are still limits. Social media platforms like Facebook and Twitter constantly struggle to find the right balance between free speech and moderation, she says.

“There’s a point where people leave the platform,” Martin says. “Completely unmoderated content, where you can say as terrible material as you want, there’s a reason why people don’t flock to it. Because while it seems like a train wreck when we see it, we don’t want to be inundated with it all the time. I think there’s a natural pushback.”

Elon Musk’s recent changes at Twitter have transformed this debate from an academic exercise into a real-time test case. Musk may have thought the question of whether to ban Donald Trump was central, Martin says. A single executive can decide a ban, but choosing what to recommend takes technology like algorithms and artificial intelligence, along with people to design and run it.

“The thing that’s different right now with Twitter is getting rid of all the people that actually did that,” Martin says. “The content moderation algorithm is only as good as the people who labeled it. If you change the people that are making those decisions or if you get rid of them, then your content moderation algorithm is going to go stale, and fairly quickly.”

Martin, an expert in privacy, technology and business ethics and the William P. and Hazel B. White Center Professor of Technology Ethics in the Mendoza College of Business, has closely analyzed content promotion. Wary of criticism over online misinformation before the 2016 presidential election, she says, social media companies put up new guardrails on what content and groups to recommend in the runup to the 2020 election.

Facebook and Twitter were consciously proactive in content moderation but stopped after the polls closed. Martin says Facebook “thought the election was over” and knew its algorithms were recommending hate groups but didn’t stop because “that kind of material got so much engagement.” With more than 1 billion users, the impact was profound.

Martin wrote about this topic in a case study textbook she edited, “Ethics of Data and Analytics,” published in 2022. In “Recommending an Insurrection: Facebook and Recommendation Algorithms,” she argues that Facebook made conscious choices to prioritize engagement because that was its chosen metric for success.

“While the takedown of a single account may make headlines, the subtle promotion and recommendation of content drove user engagement,” she wrote. “And, as Facebook and other platforms found out, user engagement did not always correspond with the best content.” Facebook’s own self-analysis found that its technology led to misinformation and radicalization. In April 2021, an internal report at Facebook found that “Facebook failed to stop an influential movement from using its platform to delegitimize the election, encourage violence, and help incite the Capitol riot.”

A central question is whether the problem is the fault of the platform or of the platform’s users. Martin says this debate within the philosophy of technology resembles the debate over guns, where some people blame the guns and others blame the people who use them for harm. “Either the technology is a neutral blank slate, or on the other end of the spectrum, technology determines everything and almost evolves on its own,” she says. “Either way, the company that’s either shepherding this deterministic technology or blaming it on the users, the company that actually designs it has really no responsibility whatsoever.

“That’s what I mean by companies hiding behind this, almost saying, ‘Both the process by which the decisions are made and also the decision itself are so black boxed or so neutral that I’m not responsible for any of its design or outcome.’” Martin rejects both claims.

An example that illustrates her conviction is Facebook’s promotion of super users, people who post material constantly. The company amplified super users because that drove engagement, even though their posts tended to include more hate speech. Think of Russian troll farms. Computer engineers discovered this trend and proposed fixing it by tweaking the algorithm. Leaked documents have shown that the company’s policy shop overruled the engineers because it feared a hit to engagement. The policy team also feared being accused of political bias, because far-right groups were often super users.

Another example in Martin’s textbook features an Amazon driver fired after four years of delivering packages around Phoenix. He received an automated email because the algorithms tracking his performance “decided he wasn’t doing his job properly.”

The company was aware that delegating the firing decision to machines could lead to mistakes and damaging headlines, “but decided it was cheaper to trust the algorithms than to pay people to investigate mistaken firings so long as the drivers could be replaced easily.” Martin instead argues that acknowledging the “value-laden biases of technology” is essential to preserving the ability of humans to control the design, development and deployment of that technology.

The debate over online content moderation traces back to Section 230 of the Communications Decency Act. In 1995, an online platform was sued for defamation over a user’s post on its bulletin board. The suit succeeded in part because the platform had tried to remove harmful content, implying that any moderation brought full liability, which discouraged platforms from attempting moderation at all.

In response, Congress passed Section 230 to protect the platform business model and encourage self-moderation. “The idea is even if you get in there and try to moderate it, we’re not going to treat you like a newspaper,” Martin says. “You’re not going to be held liable for the content that’s on your site.”

Social media companies have been successful and proactive about some types of moderation. When there’s a consensus against a type of content, such as child pornography or copyrighted material, they’re quick to remove it. The problems start when there’s widespread debate over what’s harmful.

In tough cases, the companies often use Section 230 to take a hands-off approach, claiming that only more content can overwhelm lies. Martin says they argue that there is no way to do any better: “If you regulate us, we’ll go out of business.”

She compares this to General Motors in the 1970s asserting that it couldn’t put seat belts in cars to make them safer, or steel companies claiming that they couldn’t avoid pollution.

“It’s kind of this normal evolution of an industry growing really quickly without too much regulation or too much thought,” Martin says. “People push back and say, ‘Hey, we want something different.’ They’ll come around. GM added seat belts. Eventually, we’ll have better social media companies.”

Martin may be optimistic because she’s a fan of technology.

“It’s not as if the people who critique social media want it to go away,” she says. “They want it to fulfill everything that it can be. They like the positive side.”

Social media can connect kindred spirits who share a specialized interest, or people who feel lonely. That was especially helpful during the pandemic. People living under totalitarian regimes can communicate quickly and easily, avoiding the government control exerted over older technologies.

In the early 2010s, Facebook was seen as a powerful force for freedom during the Arab Spring, when protesters organized online and toppled authoritarian leaders in North Africa and the Middle East.

Closer to home, that power can also have a downside, depending on perspective. “If I can communicate with someone else who’s just like me, that means if I want to plan an insurrection, I can find someone else that wants to plan an insurrection,” Martin says.

“A lot of times, the people that are loud with hate speech have followers that see it as a call to arms,” she says. “It’s not just one person, like Alex Jones saying to Sandy Hook victims, ‘You don’t exist and that never happened.’ It’s that there’s thousands of people that will then also target those people online and offline. I think it’s misunderstanding the asymmetry of the bullies.”

Recent phenomena, from Facebook’s COVID-19 disinformation to Instagram’s effect on teenagers’ body image, may have soured some people on social media since its early promise. Martin identifies Gamergate in 2014 as the turning point in many people’s perception. That loosely organized online harassment campaign targeted feminism and diversity in video game culture, spawning many of the worst behaviors that have followed.

Martin says social media users must demand moderation when hate speech goes too far. Advertisers can be another powerful force. “Brands don’t want the new electric Cadillac to be right next to a white supremacist post,” she says.

Musk may care more about speech he wants to promote than about targeted groups right now, but that choice could open the door to a competitor like Mastodon. For her part, Martin will continue to identify the problems and trust that technology can correct course to provide a social benefit.

“I always think of tech ethics as having two prongs,” she says. “One is a critical, evaluative examination of the technology. But the other side is to help people figure out how to design and develop better technology. The skill sets to do both aren’t necessarily in the same person.

“You need people that are calling out what’s wrong and then you need other people to say, ‘Oh, I could fix that and this is how I would do it differently.’”

Provided by

University of Notre Dame

Citation:

Expert promotes balance of moderation and engagement in technology ethics (2023, February 15)

retrieved 22 March 2023

from https://techxplore.com/news/2023-02-expert-moderation-engagement-technology-ethics.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.